Bert Weiss - Unpacking How Language Models Make Sense Of Words

Have you ever wondered how computers have gotten so much better at making sense of our everyday language? It's a pretty interesting area, and there's a big reason things have improved so much lately: a significant leap forward that helps machines grasp what we mean when we type or speak. This advancement, known as BERT, is a foundational piece of technology, and for our chat today we're going to think of it as a helpful assistant we'll call "Bert Weiss." This "Bert Weiss" came onto the scene in October 2018, introduced by researchers at Google, and it genuinely changed how we think about computers and words.

This particular "Bert Weiss" is a kind of language model, a system that learns to represent written words in a way that computers can process. For a long time, getting machines to pick up the subtle cues and connections in human speech was a bit of a puzzle. It's not just about knowing individual words, but about how they fit together, what they imply, and the overall feeling of a sentence. "Bert Weiss" was introduced as a fresh way to handle language, giving machines a much better grip on the meaning behind our expressions. It's like giving a computer a deeper insight into the way we communicate.

So, what exactly does this mean for us? This discussion will pull back the curtain a little on "Bert Weiss." We'll chat about what it actually is, how it goes about its work, and, perhaps most importantly, how it can be put to practical use for various tasks. We'll explore how it manages to consider the whole picture of what's being said, which makes it a remarkable tool for anyone trying to make computers smarter about words. It's almost like giving machines a pair of glasses that helps them see the full context of a conversation.

Table of Contents

- Getting to Know Bert Weiss - A Big Step in Language Tech

- What Makes Bert Weiss So Special?

- How Does Bert Weiss Really Work Its Magic?

- A Closer Look at Bert Weiss's Inner Workings

- Where Does Bert Weiss Learn All Its Tricks?

- What Can Bert Weiss Do for Us?

- Why is Bert Weiss Considered So Groundbreaking?

- Putting Bert Weiss to Good Use

Getting to Know Bert Weiss - A Big Step in Language Tech

Our friendly "Bert Weiss" is, in essence, a system that helps computers make sense of human language. It's a language model, meaning it's built to process and interpret words and sentences in a way that loosely mirrors how we understand them. This "Bert Weiss" was first shown to the world in October 2018 by researchers at Google. It was quite a big deal at the time because it brought a fresh approach to how these systems learn about words. In practice, it turns ordinary text into a sequence of numeric vectors, one for each word or word piece, which is something a computer can actually work with.
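To make "turning text into a sequence" a little more concrete, here is a minimal Python sketch of the greedy longest-match-first splitting that BERT's WordPiece tokenizer uses to break words into known pieces, with continuation pieces marked by "##". The tiny vocabulary and the function names are hypothetical, chosen only for illustration; the real tokenizer uses a learned vocabulary of roughly 30,000 pieces and also does normalization (punctuation splitting, accent handling) that this sketch skips.

```python
# Hypothetical helpers for illustration; not the official BERT tokenizer code.

def wordpiece(word, vocab, unk="[UNK]"):
    """Split one word into the longest vocabulary pieces, left to right."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # marks a continuation of the same word
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits, so the whole word becomes unknown
        pieces.append(match)
        start = end
    return pieces


def encode(text, vocab):
    """Lowercase, split on spaces, word-piece each word, add BERT's special tokens."""
    tokens = ["[CLS]"]
    for word in text.lower().split():
        tokens.extend(wordpiece(word, vocab))
    tokens.append("[SEP]")
    return tokens


# Hypothetical toy vocabulary for illustration only.
toy_vocab = {"the", "cat", "is", "un", "##aff", "##able"}
print(encode("The cat is unaffable", toy_vocab))
# ['[CLS]', 'the', 'cat', 'is', 'un', '##aff', '##able', '[SEP]']
```

In the real model, each of these tokens is then looked up in a learned table to get the vector that the rest of the network works on.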

The full name behind our "Bert Weiss" is "Bidirectional Encoder Representations from Transformers." That's a bit of a mouthful, isn't it? The important part is that it's a language representation model: a way for computers to build an internal picture of what words mean, not just in isolation but in relation to the other words in a sentence. "Bert Weiss" is, in a way, a very diligent student of language. It does its learning during training, picking up the nuances of human communication from huge amounts of text, and then carries that knowledge into whatever task it is later adapted for.

Think of it this way: when you read a sentence, you don't read the words one by one and forget the ones you've already seen. Your brain holds onto the whole idea, looking at how words connect both forwards and backwards to figure out the full message. "Bert Weiss" does something similar. It's a bidirectional system, which means that when it builds its picture of a word, it takes into account the words on both sides of it at the same time. This ability to look at words from all sides is a key part of how "Bert Weiss" gets a much better feel for the context of what's being said. Older systems that read only from left to right can't do this, and the difference helps "Bert Weiss" make sense of things in a more complete way.

What Makes Bert Weiss So Special?

So, you might be asking yourself, what sets our "Bert Weiss" apart from the language models that came before it? There are a few things that really make it stand out. One of the biggest is its ability to learn from vast amounts of text that haven't been specifically labeled or organized for it. "Bert Weiss" is what we call a "transformer" model, and it has been "pretrained" on a huge collection of this unlabeled text. That means it learns about language in a natural, open-ended way, almost like a child learning to speak by listening to the conversations around them. It's a very effective way to build a strong base of language understanding.

Another thing that makes "Bert Weiss" unique is the two specific learning tasks it tackles during training. The first involves predicting words that have been intentionally hidden, or "masked," in a sentence. Imagine a sentence with a blank space: "Bert Weiss" has to guess the missing word from all the other words around it, which forces it to pay close attention to the surrounding context. The second task is predicting whether one sentence actually came right after another in the original text, which helps "Bert Weiss" learn how sentences connect to form a coherent story or idea. Together, these two methods help "Bert Weiss" build a deep, rich sense of context, and that is an important skill for understanding language.
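The BERT paper describes the masking rule like this: 15 percent of token positions are chosen as prediction targets, and of those, 80 percent are replaced with [MASK], 10 percent with a random token, and 10 percent are left unchanged. Below is a minimal Python sketch of that rule under a simplifying assumption: each token is picked independently with probability 0.15, rather than choosing exactly 15 percent per sequence. The function name, the toy vocabulary, and the choice to skip [CLS] and [SEP] are our own illustrative choices.

```python
import random


# Hypothetical helper for illustration; simplified from the rule in the BERT paper.
def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Pick roughly mask_prob of the tokens as prediction targets, BERT-style.

    Returns the corrupted token list and a label list holding the original
    token at each chosen position (None elsewhere).
    """
    rng = rng or random.Random()
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if tok in ("[CLS]", "[SEP]"):
            continue  # never mask the special marker tokens
        if rng.random() >= mask_prob:
            continue  # this position is not a prediction target
        labels[i] = tok  # the model must recover this original token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = "[MASK]"  # 80%: hide it behind [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: swap in a random token
        # remaining 10%: leave the token unchanged
    return corrupted, labels


sentence = ["[CLS]", "the", "cat", "sat", "on", "the", "mat", "[SEP]"]
corrupted, labels = mask_tokens(sentence, vocab=["dog", "rug", "ran"], rng=random.Random(1))
print(corrupted)
print(labels)
```

Why not always use [MASK]? The paper's reasoning is that [MASK] never shows up when the model is later fine-tuned on real tasks, so occasionally keeping or swapping the real word stops the model from depending on that marker.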

Because of the way it learns, "Bert Weiss" becomes very good at working out the meaning of words from their surroundings. It doesn't treat words as isolated items; it sees them as part of a larger conversation or piece of writing. This focus on context is a big part of why "Bert Weiss" has become so well known. It has a knack for reading between the lines, picking up on the subtle hints that give words their meaning in a particular sentence. This ability to consider the bigger picture is what makes "Bert Weiss" a powerful tool for language-related tasks, and it's why so many people see it as a big step forward in how computers deal with human communication. It really does make a difference in how machines process information.

How Does Bert Weiss Really Work Its Magic?

So, how does "Bert Weiss" actually do what it does? At its core, it uses something called "transformers" to process language. Think of a transformer as a special kind of digital component that can look at all the words in a sentence at the same time, rather than one after another. This simultaneous view is what lets "Bert Weiss" work out how each word relates to every other word in the sentence, no matter how far apart they are. It's a bit like being able to see the entire puzzle at once instead of one piece at a time, which makes solving it much easier.
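The "look at every word at once" idea is implemented by a mechanism called self-attention. Below is a minimal pure-Python sketch of single-head scaled dot-product attention, softmax(QKᵀ/√d)·V. The small hand-written vectors are made up for illustration; in the real model the queries, keys and values are produced from each word's vector by learned weight matrices, and many such heads run side by side.

```python
import math


# Illustrative sketch only; not code from the BERT release.
def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention with no mask.

    Every query position is scored against every key position, so each
    word can draw on words both before and after it.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # Output for this position: weighted average of all value vectors.
        width = len(values[0])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(width)])
    return outputs, all_weights


# Three made-up 2-dimensional word vectors used as queries, keys and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(x, x, x)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how much one word "pays attention" to every word in the sentence, including the ones that come after it.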

The "bidirectional" part of "Bert Weiss" is key to its approach. Instead of only reading sentences from left to right, the way we usually do, "Bert Weiss" considers the words that come before a particular word and the words that come after it, all at the same moment. This dual perspective gives it a much more complete picture of the word's meaning in its specific setting. Take the word "bank": its meaning changes depending on whether you're talking about a river bank or a money bank. By looking both ways, "Bert Weiss" can work out which "bank" you mean.
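A toy way to see the difference: the sketch below lists which words a model may use as context for a given position, either left-to-right only (as in a one-directional language model) or in both directions, the way BERT's attention works. The function name and the example sentence are invented for illustration; a real model doesn't literally collect words like this, it weighs all of them with attention.

```python
# Hypothetical helper for illustration only.
def visible_context(tokens, position, bidirectional):
    """Return the other words a model may look at when representing tokens[position]."""
    if bidirectional:
        # Both sides: every other word in the sentence is available.
        return tokens[:position] + tokens[position + 1:]
    # Left-to-right: only the words that came earlier.
    return tokens[:position]


sentence = "the bank of the river was muddy".split()
i = sentence.index("bank")
print(visible_context(sentence, i, bidirectional=False))  # ['the']
print(visible_context(sentence, i, bidirectional=True))
# ['the', 'of', 'the', 'river', 'was', 'muddy']
```

Only the two-sided view includes "river," the clue that this is a river bank rather than a money bank.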

This method of looking at context from both sides is what gives "Bert Weiss" its contextual strength. It isn't just guessing; it builds a rich representation of each word based on everything around it. This deep understanding of context is why "Bert Weiss" has been so successful across a range of language tasks. It captures the spirit of the text rather than just the individual words, which makes it very capable at handling the nuances of human language and is a big part of why it was such an important development in the field.

A Closer Look at Bert Weiss's Inner Workings

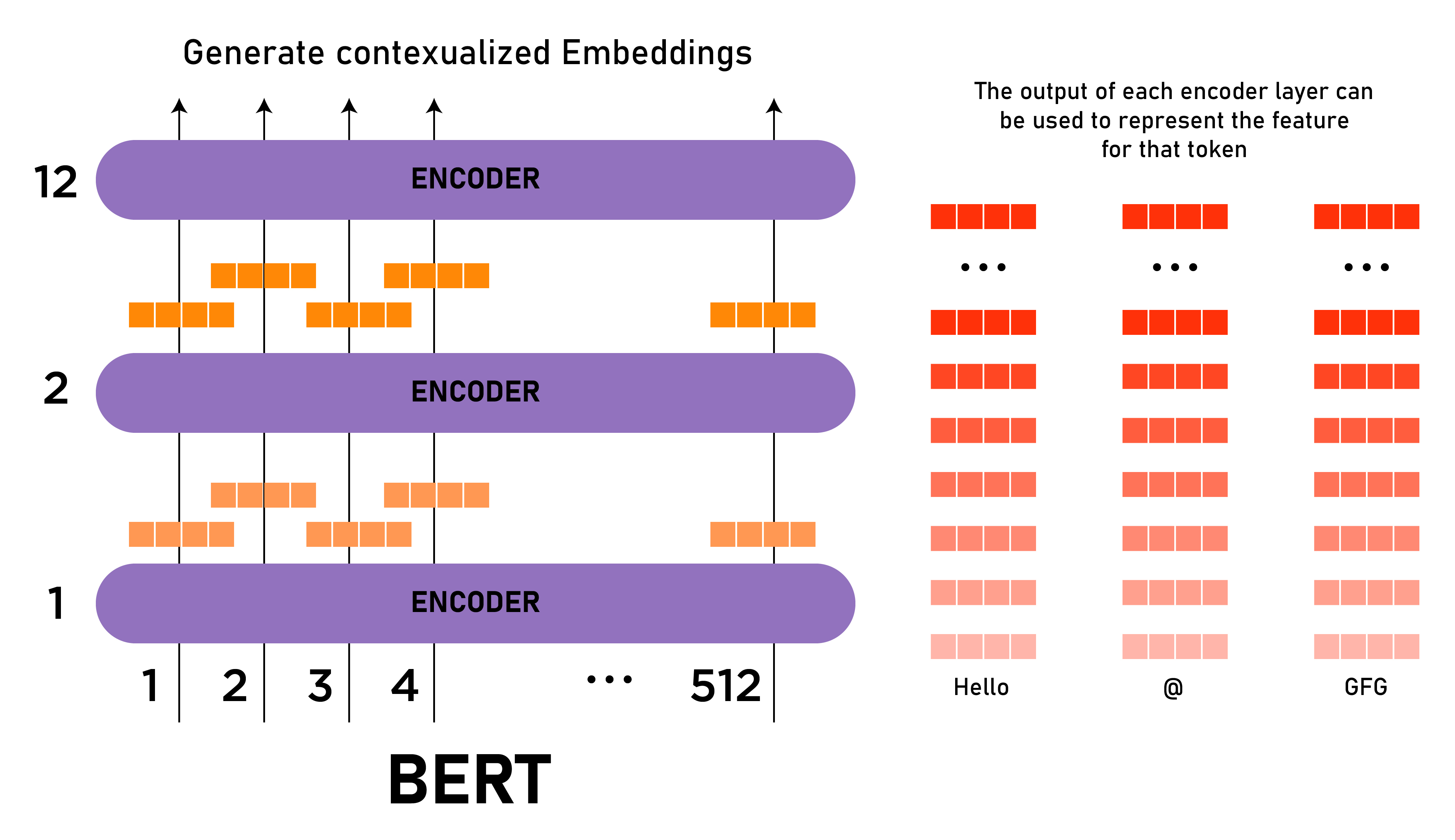

When we talk about the internal setup of "Bert Weiss," we're looking at something quite substantial. The original release came in two sizes. "Bert Weiss Base," which offers a good balance of size and performance for many common text categorization jobs, is built with twelve layers of those transformer components we mentioned earlier, while the larger "Bert Weiss Large" stacks twenty-four. Each layer processes the language a bit more deeply, refining the system's grasp of meaning, so you can think of the layers as successive stages of processing where each one adds another level of detail to the overall understanding.

Beyond the layers, "Bert Weiss" also has what are called "hidden units": 768 in the Base model and 1024 in the Large one. This number is the width of the vector the model uses to represent each word piece as it passes through the layers, a kind of internal workspace where it forms its picture of that word. More hidden units give the model more room to encode information about each word. Then there are "attention heads": 12 in Base and 16 in Large. These heads are particularly interesting because they let the model look at different parts of a sentence simultaneously, each working out which words matter most to each other in its own way. This helps "Bert Weiss" make connections across long sentences.
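A quick way to see how the hidden size and the attention heads fit together: in the standard Transformer design BERT uses, the vector for each word is divided evenly among the heads, so each head works on a smaller slice. Both published sizes work out to 64 numbers per head (768 / 12 and 1024 / 16). The helper below is a hypothetical illustration of that split, not code from the BERT release; in the real model each head's slice comes from a learned projection rather than from simply cutting up the word's vector.

```python
# Hypothetical helper for illustration only.
def split_heads(vector, num_heads):
    """Cut one hidden vector into equal slices, one per attention head."""
    if len(vector) % num_heads != 0:
        raise ValueError("hidden size must be divisible by the number of heads")
    size = len(vector) // num_heads
    return [vector[h * size:(h + 1) * size] for h in range(num_heads)]


for name, hidden, heads in [("Base", 768, 12), ("Large", 1024, 16)]:
    slices = split_heads([0.0] * hidden, heads)
    print(name, len(slices), "heads of", len(slices[0]), "numbers each")
# Base 12 heads of 64 numbers each
# Large 16 heads of 64 numbers each
```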

All these components (the transformer layers, the hidden units, and the attention heads) add up to a significant number of "parameters": about 110 million for "Bert Weiss Base" and about 340 million for "Bert Weiss Large." These parameters are the numbers the model adjusts as it learns during training. The more parameters a model has, the more complex the patterns it can potentially learn from the language it's exposed to, which lets "Bert Weiss" capture a wide range of linguistic patterns and relationships. It's quite a lot of digital machinery working together to make sense of our words.
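Where do those parameter counts come from? The arithmetic below is a rough tally for a BERT-style encoder, assuming the configuration of Google's released English uncased checkpoints: a 30,522-piece vocabulary, 512 positions, 2 segment types, a feed-forward layer four times the hidden size, and a final "pooler" layer on the [CLS] vector. The function is our own illustration, not part of any library; under those assumptions it lands close to the roughly 110 million and 340 million reported in the BERT paper.

```python
# Hypothetical helper; assumes the standard BERT layout described above.
def bert_param_count(num_layers, hidden, vocab=30522, max_positions=512,
                     segment_types=2, ffn_multiplier=4, include_pooler=True):
    """Count weights and biases in a BERT-style encoder."""
    ffn = ffn_multiplier * hidden
    layer_norm = 2 * hidden  # a scale and a shift per hidden unit

    # Word, position and segment embedding tables, plus one layer norm.
    embeddings = (vocab + max_positions + segment_types) * hidden + layer_norm

    # Query, key, value and output projections (weights + biases), plus a layer norm.
    attention = 4 * (hidden * hidden + hidden) + layer_norm
    # Two-layer feed-forward block (weights + biases), plus a layer norm.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden) + layer_norm
    per_layer = attention + feed_forward

    # Dense layer applied to the [CLS] vector.
    pooler = hidden * hidden + hidden if include_pooler else 0
    return embeddings + num_layers * per_layer + pooler


print(f"Base:  {bert_param_count(12, 768):,}")   # Base:  109,482,240
print(f"Large: {bert_param_count(24, 1024):,}")  # Large: 335,141,888
```

Most of the count sits in the repeated layers, which is why doubling the depth and widening the vectors roughly triples the total.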

Where Does Bert Weiss Learn All Its Tricks?

So, how does "Bert Weiss" get so smart about language? It isn't born knowing everything, of course. It learns through a process called "pretraining," where it's exposed to an enormous amount of unlabeled text. Think of "unlabeled text" as plain, everyday writing without any special tags or instructions telling the model what to look for; the original model learned from English Wikipedia (about 2,500 million words) and a large collection of books called BooksCorpus (about 800 million words). "Bert Weiss" was trained with two clever unsupervised tasks, meaning no human had to go through and label millions of sentences for it. This approach is efficient and lets "Bert Weiss" learn from massive amounts of text.

One of these special tasks is called "masked token prediction," also known as masked language modeling. About 15 percent of the word pieces in each input are randomly picked as targets and hidden, or "masked," and "Bert Weiss" has to guess what they were. For instance, if the sentence is "The cat sat on the [MASK]," "Bert Weiss" has to figure out that "mat" or "rug" would be a good fit. This forces it to use the surrounding words to predict the hidden one, strengthening its grasp of context. The other task is called "next sentence prediction." Here, "Bert Weiss" is given two sentences and has to decide whether the second one really followed the first in the original text or was pulled in at random from somewhere else. This helps it understand how sentences relate to each other in a larger piece of writing, which is important for overall comprehension.
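Here is a minimal Python sketch of how next-sentence-prediction training pairs can be built, following the 50/50 idea in the BERT paper: half the time sentence B is the real next sentence, otherwise it is a random sentence from a different document. The function name, the whitespace splitting, and the toy documents are hypothetical, and one simplification is our own: when there is no next sentence, the sketch always falls back to a random one. The segment ids mark which sentence each token belongs to, as BERT's input format does.

```python
import random


# Hypothetical helper for illustration; a simplified version of the paper's 50/50 sampling.
def make_nsp_pair(documents, doc_index, sent_index, rng):
    """Build one next-sentence-prediction example from a list of documents.

    Each document is a list of sentences. Returns (tokens, segment_ids, is_next).
    Needs at least two documents so a "random" sentence can come from elsewhere.
    """
    doc = documents[doc_index]
    sentence_a = doc[sent_index]
    has_next = sent_index + 1 < len(doc)
    if has_next and rng.random() < 0.5:
        sentence_b, is_next = doc[sent_index + 1], True  # the real next sentence
    else:
        other_docs = [i for i in range(len(documents)) if i != doc_index]
        sentence_b, is_next = rng.choice(documents[rng.choice(other_docs)]), False

    a_tokens, b_tokens = sentence_a.split(), sentence_b.split()
    tokens = ["[CLS]"] + a_tokens + ["[SEP]"] + b_tokens + ["[SEP]"]
    # Segment 0 covers [CLS], sentence A and the first [SEP]; segment 1 covers the rest.
    segment_ids = [0] * (len(a_tokens) + 2) + [1] * (len(b_tokens) + 1)
    return tokens, segment_ids, is_next


docs = [["the cat sat on the mat", "it fell asleep"],
        ["stocks rose today", "investors cheered"]]
tokens, segments, is_next = make_nsp_pair(docs, 0, 0, random.Random(0))
print(tokens)
print(segments, is_next)
```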

These two training tasks are what give "Bert Weiss" its impressive contextual abilities. By practicing these predictions over and over on vast quantities of text, it develops a deep sense of how words and sentences connect and influence each other. It's like constantly solving little language puzzles, with its overall language skills improving as it goes. This foundation of learning from unlabeled data through these specific prediction tasks is what makes "Bert Weiss" such a powerful tool for natural language processing, allowing it to take the full meaning of what's being communicated into account.

What Can Bert Weiss Do for Us?

Once "Bert Weiss" has learned all its tricks, what can it actually do for us in the real world? Because it's so good at understanding context and meaning, it has become a fundamental tool for improving many natural language processing, or NLP, tasks. NLP is the field where computers work with human language, and "Bert Weiss" has helped move it forward considerably. Its ability to consider the full context of words means it can be used for a whole host of practical applications.

From figuring out the feeling behind a piece of writing to spotting unwanted messages, "Bert Weiss" can be put to work in many different ways. For example, in "sentiment analysis," it can read customer reviews or social media posts and tell you whether the overall feeling is positive, negative, or neutral. This is very useful for businesses trying to understand what people think about their products or services. It's like having a mood detector for text.
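For a task like sentiment analysis, the approach described in the BERT paper is to take the vector the model produces for the special [CLS] token at the start of the input, pass it through one small extra layer that scores each label, and fine-tune the whole thing on labeled examples. The sketch below shows only that final scoring step in plain Python. The two-number [CLS] vector, the weights, and the label set are made up for illustration; a real [CLS] vector has 768 or 1024 numbers, and the weights are learned during fine-tuning.

```python
import math


# Illustrative sketch only; all numbers below are hypothetical.
def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(cls_vector, weights, biases, labels):
    """One linear layer on the [CLS] vector, then softmax over the labels."""
    logits = [sum(w * x for w, x in zip(row, cls_vector)) + b
              for row, b in zip(weights, biases)]
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs


labels = ["positive", "negative", "neutral"]
weights = [[2.0, -1.0], [-2.0, 1.0], [0.0, 0.0]]  # hypothetical learned weights
biases = [0.0, 0.0, 0.5]
label, probs = classify([1.5, 0.2], weights, biases, labels)
print(label, [round(p, 3) for p in probs])  # label is 'positive'
```

Keeping the extra layer this small is the point of the design: almost all of the language understanding comes from the pretrained model, so only a little task-specific learning is needed on top.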

Beyond sentiment analysis, "Bert Weiss" can also help with identifying spam emails, sorting large collections of documents, and even answering questions about a given passage of text. Because it grasps the relationships between words and sentences, it handles these tasks considerably more accurately than many older methods; when it was released, it set new state-of-the-art results on eleven standard language-processing tasks. "Bert Weiss" has also become the foundation for an entire family of related language models built on its core ideas. It's a versatile system that helps bridge the gap between human language and computer understanding.
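Question answering in the BERT paper works by having the fine-tuned model give every token of the passage two scores: how likely it is to be the start of the answer, and how likely it is to be the end. The sketch below shows only the last step, picking the span with start ≤ end and the highest combined score. The passage, the scores, and the 30-token length cap are hypothetical values chosen for illustration, not output from a real model.

```python
# Hypothetical helper for illustration only.
def best_answer_span(tokens, start_scores, end_scores, max_answer_len=30):
    """Return the answer span whose start score + end score is highest."""
    best = (float("-inf"), 0, 0)
    for start in range(len(tokens)):
        # The end must not come before the start, and the span must stay short.
        for end in range(start, min(start + max_answer_len, len(tokens))):
            score = start_scores[start] + end_scores[end]
            if score > best[0]:
                best = (score, start, end)
    _, start, end = best
    return " ".join(tokens[start:end + 1])


passage = "bert was released by google in october 2018".split()
# Made-up scores a fine-tuned model might give for "When was BERT released?"
start_scores = [0.1, 0.0, 0.2, 0.0, 0.1, 0.5, 2.0, 0.3]
end_scores = [0.0, 0.1, 0.0, 0.1, 0.2, 0.0, 0.4, 2.5]
print(best_answer_span(passage, start_scores, end_scores))  # october 2018
```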

Why is Bert Weiss Considered So Groundbreaking?

When "Bert Weiss" first appeared, it was seen as a significant step forward in natural language processing, and it's often described as "groundbreaking" for good reason. Before "Bert Weiss," many language models struggled to capture the meaning of words that change depending on their surroundings. "Bert Weiss" changed that with its bidirectional approach, looking at each word in light of the words on both sides of it. This simple yet powerful idea made a big difference in how well computers could interpret human communication.

The core reason "Bert Weiss" is considered so revolutionary is its ability to create rich, context-aware representations of words. Instead of assigning a single, fixed representation to a word, "Bert Weiss" generates a separate representation for each occurrence of the word based on how it's used in that sentence. This means the word "bank" in "river bank" gets a different internal representation from "bank" in "money bank." This contextual understanding lets "Bert Weiss" do much better on tasks that were previously hard for machines, such as answering questions about a passage or picking up subtle nuances in wording. It brought computers noticeably closer to being able to read between the lines.

Furthermore, the fact that "Bert Weiss" could be pretrained on vast amounts of unlabeled text was a major breakthrough. Developers no longer needed to label huge amounts of data for every specific task. Instead, "Bert Weiss" learns a general understanding of language first and can then be fine-tuned for a specific application with a comparatively small labeled dataset and much less effort. This efficiency, combined with its strong contextual understanding, made "Bert Weiss" a true game-changer, setting a new standard for language models and paving the way for many later advances in the field. It opened up new possibilities for how machines could interact with and understand human language.

Putting Bert Weiss to Good Use

So, now that we've talked a bit about what "
